This blog looks at what data means to us all and how we can continue to make sense of it: finding insights that let us take meaningful action, and adapting to the continually changing nature of the data we produce.

Image via humansofdata.com

Data in Flux

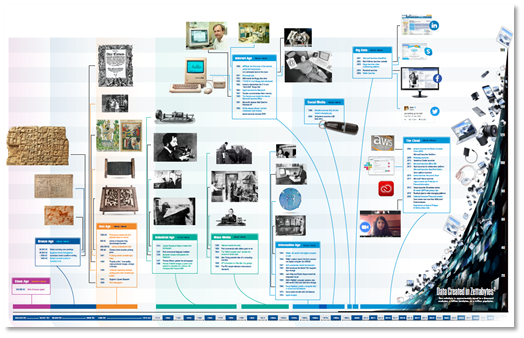

We have seen data change significantly over the last 70 years: from noughts and ones to abstract data.

Data has changed to such an extent that not only are its structures different, but there are also more types of data being generated.

To find meaning in data, we need to interpret it in a form people can understand, so that it can be used to drive an outcome or action.

A Brief History of Data

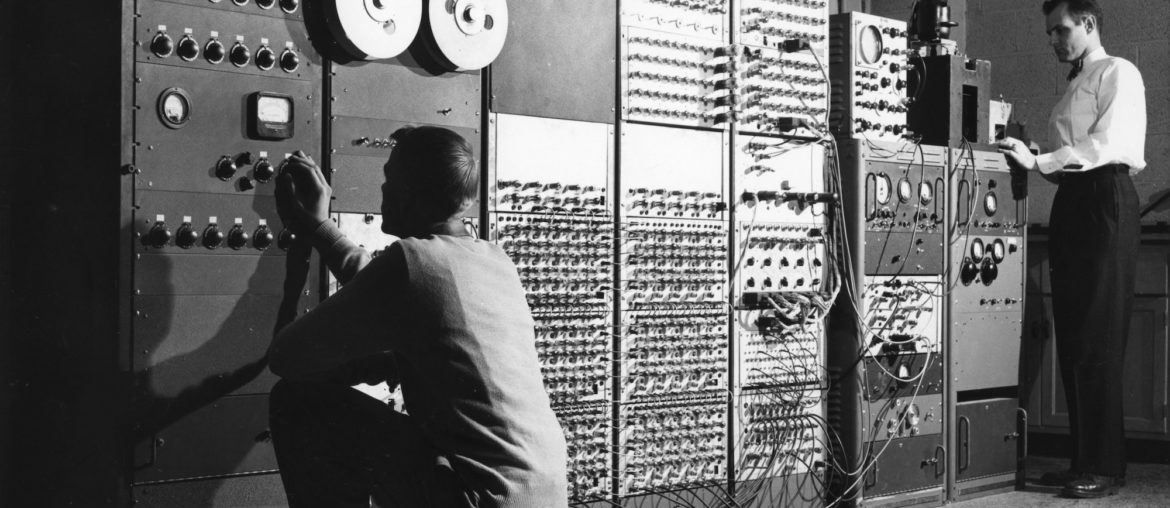

Over the last 70 years, the way we process and interpret digital data has changed vastly: from binary data stored on magnetic drums through to the tabular records of the ’70s, stored in the structure of the relational model, which underpins what is known today as a database.

Image via Proof Point

In the ’80s, data evolved to include unstructured data in the form of BLOBs, a term coined by Jim Starkey, the inventor of InterBase, and later expanded to Basic Large Object (today more often Binary Large Object).

Since then, data has become even more complex: it is conveyed in many different ways and formats, and stored in a wide variety of locations and systems.

Today’s data can be stored and transported in some of the following ways:

- Streaming services – delivering data, video and audio over the internet (sometimes referred to as triple play)

- Radio frequencies – WiFi and Bluetooth and their derivatives

- Over power lines, sharing the same cables as the electrical supply (powerline communication)

- Via modulated light rays (called LiFi) at speeds of up to 100 Gbit/s – which is ideal for environments where transmitting data over radio waves is not practical or effective

- Over sound waves (data over sound), which is ideal for keeping IoT devices connected where WiFi is not practical, and

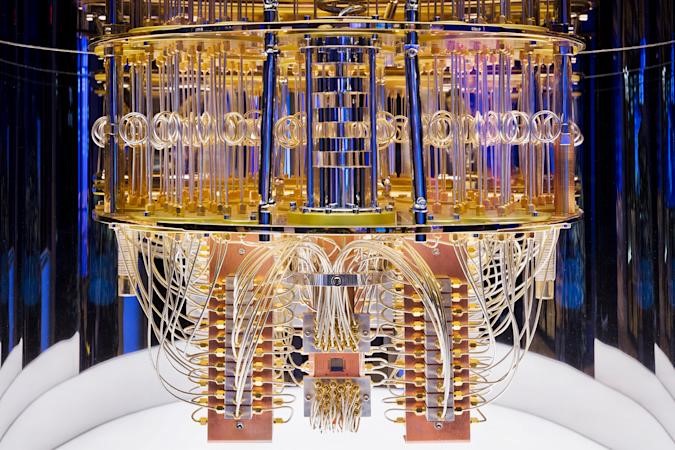

- At some point in the future, via quantum networks, which use entanglement between physically separated devices to exchange data securely (entanglement cannot by itself transmit information faster than light)

- ‘Quantum Entanglement may provide us with new ways to work with data that were once thought impossible. With the future of Quantum Computing, we will attempt to answer questions that require so much data we could never have processed it before, and by combining it with QE, we can do that in more efficient and secure ways.’

Image via IBM Research, Flickr

Challenges

The challenge with data is that, because of its varied formats and access methods, extracting it in a way that yields value can be time-consuming.

Data isn’t always localized or drawn from one source, so a lot of time and effort goes into sorting, tagging and abstracting it to make sense of it. Only once an interpretation has been built can it be used to trigger a response or provide insight.
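To make the sorting, tagging and abstracting step concrete, here is a minimal Python sketch. It assumes two hypothetical sources: a legacy system that exports rows of (epoch seconds, metric name, value) and a cloud API that returns JSON-style events. The `Record` schema, field names and source labels are illustrative assumptions, not part of any iMonitor product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Record:
    """Hypothetical common schema that every source is mapped onto."""
    source: str          # where the record came from
    timestamp: datetime  # always stored in UTC
    metric: str          # normalised metric name
    value: float
    tags: set = field(default_factory=set)


def from_legacy_row(row):
    """Assumed legacy export: (epoch seconds, metric name, value), all as strings."""
    epoch, metric, value = row
    return Record(
        source="legacy-db",
        timestamp=datetime.fromtimestamp(int(epoch), tz=timezone.utc),
        metric=metric.strip().lower(),
        value=float(value),
        tags={"on-premises"},
    )


def from_cloud_event(event):
    """Assumed cloud event: ISO-8601 time with an offset and a nested reading."""
    return Record(
        source="cloud-api",
        timestamp=datetime.fromisoformat(event["time"]).astimezone(timezone.utc),
        metric=event["reading"]["name"].strip().lower(),
        value=float(event["reading"]["val"]),
        tags={"cloud"} | set(event.get("labels", [])),
    )


def normalise(legacy_rows, cloud_events):
    """Map both sources onto Record and merge them into one time-ordered list."""
    records = [from_legacy_row(r) for r in legacy_rows]
    records += [from_cloud_event(e) for e in cloud_events]
    return sorted(records, key=lambda r: r.timestamp)


if __name__ == "__main__":
    # Made-up sample data for the two hypothetical sources.
    rows = [("1622541600", " CPU_Load ", "0.42")]
    events = [{"time": "2021-06-01T10:00:30+00:00",
               "reading": {"name": "cpu_load", "val": "0.57"},
               "labels": ["eu-west"]}]
    for rec in normalise(rows, events):
        print(rec.source, rec.timestamp.isoformat(), rec.metric, rec.value, sorted(rec.tags))
```

Both records end up with the metric name `cpu_load` and a UTC timestamp, so they can be compared on one timeline even though the two sources disagreed on format.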

As organizations modernise their data so that it can move into the cloud, the data generated along the way is becoming more abstract because of containerization. How data is accessed and gathered must therefore be considered carefully, especially given the growing number of data access APIs and protocols.

To add to the complexity, on top of on-premises (traditional) data and Cloud data, we must also consider IoT data, which is transmitted across the network via many different protocols (MQTT and CoAP, for example).
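A minimal Python sketch of bringing IoT data that arrives in different wire formats into one shape. It assumes two hypothetical channels: a gateway that publishes JSON text and a low-power radio link that sends a packed 5-byte binary frame. The channel names, field names and frame layout are invented for illustration; a real deployment would take them from the device or protocol documentation.

```python
import json
import struct

# Hypothetical binary frame layout from a constrained sensor:
# little-endian uint16 device id, int16 temperature in hundredths of a degree C,
# uint8 battery percentage (5 bytes in total, no padding).
BINARY_FRAME = struct.Struct("<HhB")


def decode_json(payload):
    """Text payload as a gateway might publish it (field names assumed)."""
    msg = json.loads(payload.decode("utf-8"))
    return {"device": str(msg["id"]), "temp_c": float(msg["temp"]), "battery": int(msg["batt"])}


def decode_binary(payload):
    """Compact binary payload from a low-power radio link (layout assumed)."""
    device_id, temp_centi, battery = BINARY_FRAME.unpack(payload)
    return {"device": str(device_id), "temp_c": temp_centi / 100, "battery": battery}


# Map each hypothetical channel to the decoder for its wire format.
DECODERS = {"gateway-json": decode_json, "radio-binary": decode_binary}


def decode(channel, payload):
    """Decode a payload into the common reading shape, keeping its provenance."""
    reading = DECODERS[channel](payload)
    reading["channel"] = channel  # useful for later filtering and auditing
    return reading


if __name__ == "__main__":
    print(decode("gateway-json", b'{"id": 17, "temp": 21.5, "batt": 88}'))
    print(decode("radio-binary", BINARY_FRAME.pack(17, 2150, 88)))
```

Keeping the decoders in a lookup table means supporting a new protocol only requires adding one function, while everything downstream keeps working with the same reading shape.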

Overcoming the challenges

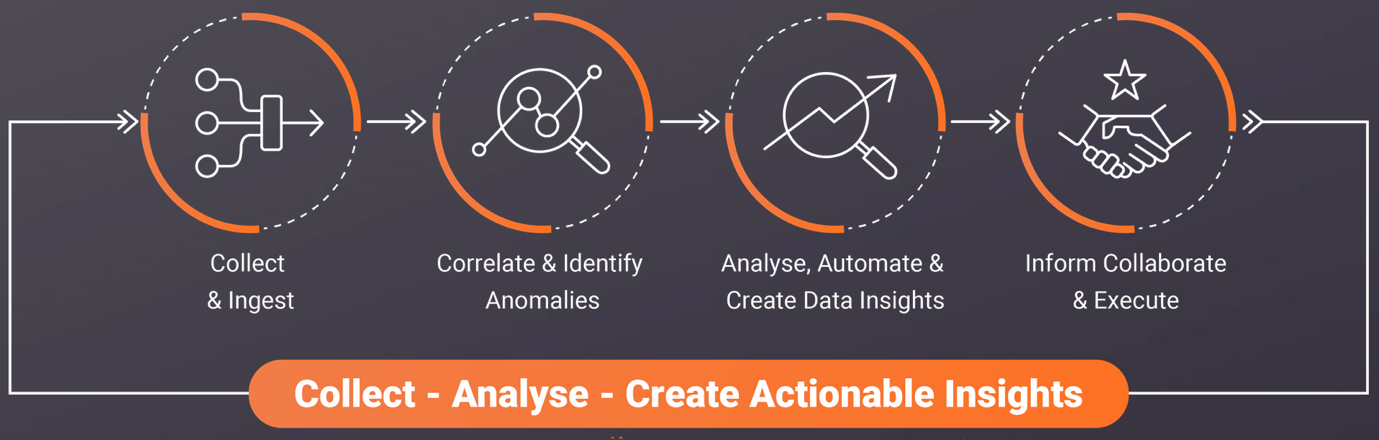

To tackle these challenges, we at iMonitor have developed the Intelligent Data Platform: a platform built around Machine Learning and Big Data, designed to bring two disparate worlds, legacy and multi-cloud, together.

The Intelligent Data Platform is built to collect data from across a multi-cloud hybrid infrastructure and can ingest data (structured or unstructured) from any source, be it traditional IT, Operational IT (IoT), Mainframe, Storage, or business data.

As noted in the blog (Introducing iMonitor The Intelligent Data Platform), ‘if you have all the data you need in a single place it is much easier to make it accessible to the business and any third parties, you eliminate silos and create consistency as the data is cleansed and filtered according to business and consumer requirements.’

In summary, moving data from on-premises systems to the Cloud, and finding meaning in it along the way, is challenging.

The approach to gathering data can be summarized as follows: understand the end goal; consider both your available sources and those that may not be obvious; and look for ways to extract relationships and meaning from the combined data that serve that end goal.
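As a small illustration of extracting a relationship from combined data, the Python sketch below joins two hypothetical hourly series (checkout latency from IT monitoring and order counts from a business system) on their shared timestamps and computes a Pearson correlation coefficient. The data, names and choice of Pearson correlation are assumptions made for the example; a strong correlation only suggests a relationship worth investigating, not a cause.

```python
from math import sqrt


def join_on_key(left, right):
    """Inner join of two {key: value} series; keys present in only one source are dropped."""
    shared = sorted(left.keys() & right.keys())
    return [(key, left[key], right[key]) for key in shared]


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (undefined if either is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(ss_x * ss_y)


if __name__ == "__main__":
    # Hypothetical hourly figures from two separate systems.
    latency_ms = {"09:00": 120, "10:00": 180, "11:00": 240, "12:00": 300}
    orders = {"10:00": 95, "11:00": 80, "12:00": 65, "13:00": 50}

    joined = join_on_key(latency_ms, orders)
    latencies = [row[1] for row in joined]
    order_counts = [row[2] for row in joined]
    print(joined)
    print(pearson(latencies, order_counts))
```

With this made-up data only 10:00, 11:00 and 12:00 appear in both sources, and the coefficient comes out at -1.0: in those hours, higher latency lines up exactly with fewer orders. Neither source shows that on its own.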

Because data is dispersed, combining it adds value and insight to the resulting interpretation: it can reveal what is normally hidden and so lead to useful output.

In 2021, we are witnessing 2.5 quintillion bytes (25 followed by 17 zeros) of data being created each day. With data growing at its current pace, that figure will only keep climbing.
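To put the daily figure in more familiar units, using decimal SI prefixes (one exabyte, EB, is 10^18 bytes and one zettabyte, ZB, is 10^21 bytes):

$$
2.5\ \text{quintillion bytes} = 2.5 \times 10^{18}\ \text{bytes} = 25 \times 10^{17}\ \text{bytes} = 2.5\ \text{EB per day}
$$

$$
2.5 \times 10^{18}\ \tfrac{\text{bytes}}{\text{day}} \times 365\ \text{days} = 9.125 \times 10^{20}\ \text{bytes} \approx 0.91\ \text{ZB per year}
$$

The yearly figure assumes the daily rate stays flat; if data creation keeps growing, the real total will be higher.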

Look out for my next blog, where I will discuss how we can manage the large volumes of data now being created and what it looks like for the future.

Stuart Cook MBCS – iMonitor Consultant and Product Manager

For more information on the iMonitor data platform, why not sign up for our blogs or visit our website at www.imonitor.ai?